Breaking a Creativity World Record with a Genetic Algorithm

In this post, we’ll take a look at a creativity quiz and game the crap out of it with computer science.

Feel free to skip to the TL;DR section!

Setting The Scene

One October day, I got a Discord ping from my partner. I checked my phone to find a link to a quiz that advertised itself as a “fast creativity test” and a way to measure “verbal creativity”. Intrigued, I clicked through and read the rules. The premise is simple enough: write 10 words that are as unrelated to each other as possible. Knowing that we’d inevitably compare scores, I knew I had to focus up. Go ahead and give it a go before reading on! It only takes a couple of minutes.

I ended up getting beat 90.8 to 97.56. It was clear I once again needed to call on computers to eliminate my human shortcomings. Surely computers can be “creative” if you can define exactly what creativity should look like. In most cases that might be an oxymoron, but not in this case, since we have exact metric we can optimize for.

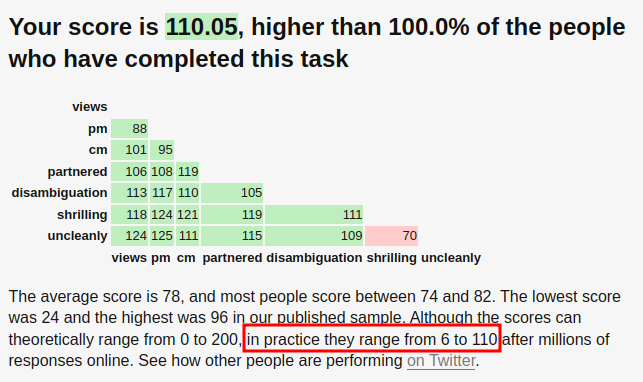

After you complete a quiz, the results page states that “[the scores] range from 6 to 110 after millions of responses online”. That means that 110 is the number to beat for a world record!

Quiz Scoring

The quiz works by first mapping your words into their word embeddings that were trained from Stanford’s GloVe project. The score that the quiz returns is the average cosine distance between every pair of vectors, multiplied by 100. The grading code (and allowed word list) can be found here. You can download the word embeddings from here.

For readers who are new to word embeddings, think about them as a way to map from a word to a vector. Vectors that point in similar directions (small cosine distance) are more related to each other semantically, like “dog” and “cat”. Vectors that point in opposite directions (maximal cosine distance) are less related to each other semantically, like “gerontology” and “yellow”.

NP-Hard

Maximizing the average distance between a collection of vectors/points over some distance function is known as the Maximum Diversity Problem and is NP-Hard. There are several papers that describe techniques for getting decent solutions to this problem (1, 2), but I couldn’t find code for any technique. Looks like we’re going to roll our own!

Greedy Approach

My first approach was a greedy algorithm:

- Choose a random starting word

- Add word to collection

- Iterate over every remaining word in the dictionary and calculate the score if we added this word to our collection

- Choose the word that maximizes the score

- Repeat from step 2 until we have 7 words.

- Repeat from step 1, keeping the best collection of words we’ve found so far.

Why 7 words? A key observation is that the quiz only scores the first seven valid words of the ten submitted. That way, if you make a typo or accidentally duplicate a word, your score won’t be affected. This is great news for us, since it reduces our problem size.

The greedy algorithm didn’t perform well. It was scoring around 90 (95th percentile), even after filtering out all words with less than 8 characters. We have to step it up.

Genetic Algorithm

I had read in the past about genetic algorithms being successful in finding approximations for discrete optimization problems. For example, check out this Computerphile video on how you could apply a genetic algorithm to get an approximation for the knapsack problem. It’s important to note that genetic algorithms won’t necessarily get you the optimal solution.

Not wanting to implement the genetic algorithm from scratch, I looked for Python frameworks and found pymoo. pymoo is a great fit for this problem since it features a genetic algorithm implementation with an example I barely had to modify to adapt it to my problem.

I simply declared out['F'], the function I was minimizing, and out['G'], the constraints I was imposing, and let pymoo do the rest.

Specifically,

# Minimize the negative average distance, hence maximizing the average distance

out['F'] = -self._calculate_average_distance(x)

# Ensure that we have exactly 7 words

out['G'] = (self.subset_size - np.sum(x)) ** 2

My full implementation for a generic maximum diversity problem solver using pymoo can be found here.

Results

I ran several instances of the solver simultaneously for about a week or so on my Ryzen 7 3800X. The results were in; how did it do? See for yourself!

I can hear you, the reader, start to say, “those words aren’t valid nouns!” Or maybe you were starting to say “110.05 is so close to 110; how do you know they didn’t round something higher down to 110?” I’m very sorry to say that I can’t hear you over me yelling WOOOOOOOOOOOOOOORLD RECOOOOOOOOOOOOORD!

Conclusion

I love pouring disproportionate amounts of engineering effort into solving problems that don’t matter. Just one laugh and an eye roll makes the entire process worth it to me. Can you beat my score? I’d love to hear about it if so! Mention me on Twitter @0x1337cafe with your results.

TL;DR

- Do a human attempt of a creativity quiz, get score of 90.8. The score to beat is 110.

- Quiz is scored by converting your first 7 valid words into their word embeddings, and then calculating the average cosine distance between every pair of vectors

- Maximizing this score is known as the Maximum Diversity Problem and is NP-Hard

- Try a greedy algorithm, get average score of around 90

- Use pymoo to implement a genetic algorithm and barely squeeze out a “world record” score of 110.05